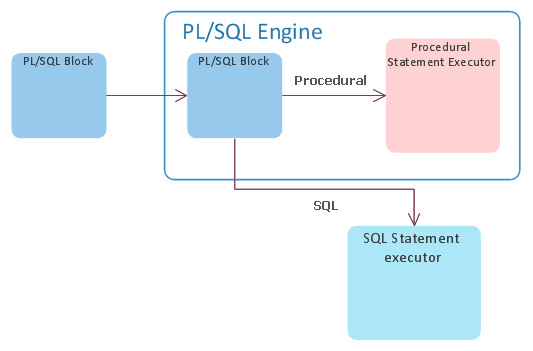

In our second and last part about association of statistics to PL/SQL functions in Oracle we’ll take a look at how to estimate I/O and CPU usage.

First we’ll try to measure I/O. Let’s have a procedure discount from an online shop. All that it does is that I takes order ID and applies requested discount on it. For I/O measurement we’ll use handy PL/SQL program called mystats which is variation on Jonathan Lewis's SNAP_MY_STATS package to report the resource consumption of a unit of work between two snapshots. You can get it here: https://github.com/oracle-developer/mystats.

Simple example of usage should look like follows:

set serveroutput on

/

begin

mystats_pkg.ms_start;

end;

/

begin

discount(1,20);

end;

/

begin

mystats_pkg.ms_stop(mystats_pkg.statname_ntt('consistent gets','db block gets'));

end;

/

rollback

/

/

begin

mystats_pkg.ms_start;

end;

/

begin

discount(1,20);

end;

/

begin

mystats_pkg.ms_stop(mystats_pkg.statname_ntt('consistent gets','db block gets'));

end;

/

rollback

/

Output will look something like this:

From report we see that our measured I/O is 14. This is very simple and probably not very representative test. It would be a good idea to give procedure more iterations over different data and then divide results by number of iterations.

Now that we now how much I/O will function usually consume, we can focus on CPU. For CPU estimate we will use PL/SQL function called DBMS_ODCI.ESTIMATE_CPU_UNITS (https://docs.oracle.com/cd/B19306_01/appdev.102/b14258/d_odci.htm#i996771) which returns the approximate number of CPU instructions (in thousands) corresponding to a specified time interval (in seconds).

We can do something like this with following output:

set serveroutput on

/

declare

v_start PLS_INTEGER := DBMS_UTILITY.GET_TIME;

v_end PLS_INTEGER;

begin

mtg.order_pkg.discount(1,20);

v_end := DBMS_UTILITY.GET_TIME;

dbms_output.put_line('Time:'||to_char((v_end-v_start)/100,'999,999.999'));

DBMS_OUTPUT.PUT_LINE(ROUND(1000 * DBMS_ODCI.ESTIMATE_CPU_UNITS(v_end-v_start)/100,0));

end;

/

rollback

/

/

declare

v_start PLS_INTEGER := DBMS_UTILITY.GET_TIME;

v_end PLS_INTEGER;

begin

mtg.order_pkg.discount(1,20);

v_end := DBMS_UTILITY.GET_TIME;

dbms_output.put_line('Time:'||to_char((v_end-v_start)/100,'999,999.999'));

DBMS_OUTPUT.PUT_LINE(ROUND(1000 * DBMS_ODCI.ESTIMATE_CPU_UNITS(v_end-v_start)/100,0));

end;

/

rollback

/

Again I would advise you to give it more iterations to get nicer picture a use average value.